Artificial General Intelligence: Now Is the Time

Permanent link to this article: http://www.kurzweilai.net/meme/frame.html?main=/articles/art0701.html

The creation of a superhumanly intelligent AI system could be possible within 10 years, with an "AI Manhattan Project," says Ben Goertzel.

Published on KurzweilAI.net April 9, 2007

Is AI Engineering the Shortest Path to a Positive Singularity?

The first robots I recall reading about were in Isaac Asimov's novels.1 Though I was quite young when I read them, I recall being perplexed that his robots were so close to humans in their level of intelligence. Surely, it seemed to me, once we could make a machine as smart as a person, we would soon afterwards be able to make one much smarter. It seemed unlikely that the human brain embodied some kind of intrinsic upper limit to evolved or engineered intelligence.

And sure enough, after a little more reading I discovered there were plenty of SF writers who thought the same way as me, exploring the implications of superhuman artificial intelligence. I learned that many others before me had reached the conclusion that the creation of machines vastly smarter than humans would lead to a profound discontinuity in the history of mind-on-Earth.2

But what I did not see back in the 1970s, when I started plowing through the SF literature, was just how plausible it was that this discontinuous transition would occur during my own lifetime. Back then, my primary plan for radical life extension on a personal level was to figure out how to build a super-fast (probably nuclear powered) spaceship, and fly it away from Earth at relativistic speeds, returning in a few tens of thousands of years when others would have surely solved the problems of curing human aging, creating superhuman thinking machines, and so forth3. I didn't consider it very likely, at that time, that technology would advance so rapidly within my natural human lifespan as to make the futures envisioned in SF novels seem old-fashioned and unimaginative.

Things look very different now!—and not because the menu of possibilities has changed so much, though there are differences in emphasis now (nanotech and quantum computing were not so popular in the 70s, for instance). Rather, things look different because the plausible time-scale for the technological discontinuity associated with the advent of superhuman AI has become so excitingly near-term. There is even a popular label for this discontinuity: the Singularity. A reasonably large number of serious scientists now expect that superhuman AI, general-purpose molecular assemblers, uploading of human minds into software containers, and other amazing science-fictional feats may well be possible within the next century. Vernor Vinge, who originated the use of the term Singularity in this context4, said in 1993 that he expected the event to occur before 2030. Ray Kurzweil, who has become the best-known spokesman for the Singularity idea, estimates 2045 or so5.

Obviously, putting a specific date on a future event that depends on not-yet-made scientific breakthroughs is a chancy thing. I am impressed with the detailed analyses that Kurzweil has done, in his attempt to predict the rate of future developments via extrapolating from the past. However, my own perspective in the last 15 years has been more that of an activist than a prognosticator. I have become convinced that the time from here to Singularity depends sensitively on the particulars of what we humans do during the next decade (and even the next few years). And, the nature of the Singularity achieved (for example, its benevolence versus malevolence, from a human perspective) may depend sensitively on these particulars as well.

I have spent most of the last decade, plus a fair bit of the previous one, working on artificial intelligence research, and the reason is not just that it's a fascinating intellectual challenge. My main motivation is my belief that, if it is done properly, AI engineering can bring us rapidly to a positive Singularity. So far as I can tell, more rapidly and reliably than any of the other alternative technology paths under development. And I consider this a very important thing.

As a multidisciplinary scientist, I am acutely aware of the dangers as well as the promises of advanced technologies. Genetic engineering excites me due to its possibilities for enabling life extension and abolishing disease; but its potential in the domain of artificial pathogens scares me. Low-temperature nuclear reactions are exciting in their potential implications for energy technology; but once they're fully understood, what sort of weaponry may they lead to? Nanotechnology will eventually allow us to build arbitrary physical objects the way we now build things with legos—and there are a lot of evil things that people could choose to build, as well as a lot of wonderful things. Et cetera. I have become convinced that the most hopeful way for us to avoid the dangers of these various advanced technologies is to create a powerful and beneficial superhuman intelligence, to help us control these things and develop them wisely. This will involve AIs that are not only more intelligent but wiser than human beings; but there seems no reason why this is fundamentally out of reach, since human wisdom is arguably even more sorely limited than human intelligence.

As a result of the specifics of my AI research, I have come to a position somewhat more radical than that of most Singularity pundits. Kurzweil estimates 2045 for the Singularity, and 2029 for human-level AI via a brain emulation methodology. I think this is basically a plausible scenario (though I do think that, if a human-level AI takes 16 years to create a Singularity, this slow pace will be due to intentional forbearance and caution rather than technological obstacles; I believe that a human-level AI, once it exists, will be able to improve its intelligence at a rapid rate, making Singularity imminent within months or a few years at most). But I also think a much more optimistic scenario is plausible.

At the 2006 conference of the World Transhumanist Association, I gave a talk entitled "Ten Years To the Singularity (If We Really, Really Try)"6. That talk summarized my perspective fairly well (briefly and nontechnically, but accurately). I believe that the creation of a superhumanly intelligent AI system is possible within 10 years, and maybe even within a lesser period of time (3-5 years). Predicting the exact number of years is not possible at this stage. But the point is, I believe that I have arrived at a detailed software design that is capable of giving rise to intelligence at the human level and beyond. If this is correct, it means that the possibility is there to achieve Singularity faster than even Kurzweil and his ilk predict. Furthermore, having arrived at one software design that appears Singularity-capable, I have become confident there are many others as well. There may be other researchers besides me, actively working on projects with the capability of achieving massive levels of intelligence.

But the "If We Really, Really Try" part is also critical. My own software design, the Novamente Cognition Engine, is large and complex. It would take me decades to complete the implementation, testing and teaching on my own. If the advent of superhuman AI is to be accelerated in the manner I'm describing, a coordinated effort among a team of gifted computer scientists will be required. Currently I am trying to pull together such an effort in the context of a small software company, Novamente LLC7. I am optimistic about this venture. However, objectively, it is certainly not impossible that neither I nor anyone else with a viable AI design will succeed in pulling together the needed resources. In this case, the Kurzweil-style projections may come out correct—but not because the Singularity couldn't have arisen sooner if people had focused their efforts on the right things.

In my view, if the US government created an "AI Manhattan Project"—run without a progress-obstructing bureaucracy, and based on gathering together an interdisciplinary team of the greatest AI minds on the planet—then we would have a human-level AI within 5 years. Almost guaranteed, assuming Novamente or some other viable design were adopted. It is a big project, but not nearly as big as building, say, Windows Vista.

Of course, in the real world there is no AI Manhattan Project; and the government AI establishments, in the US and other nations, are currently primarily concerned with narrowly-scoped, task-specific AI projects that (in my view, and that of many other researchers) contribute little to the quest for artificial general intelligence: software with the capability for autonomous, creative, domain-independent thought. So, the pursuit of true, general AI has largely been marginalized, and will not occur as quickly as would be the case if there were an AI Manhattan Project or some other similar effort. But even so I am hopeful we can get there anyway, although it may take 7 or 10 years or more rather than the 3–5 years a larger-scale concerted effort might achieve.

In the rest of this essay I'm going to talk more about AI and the Singularity—how I see AI fitting into the Singularity and the path thereto. A companion essay, "The Novamente Approach to Artificial General Intelligence," describes my particular approach to working toward powerful AI. I have written previously on the same topics, and some of my thoughts here are certainly redundant with things I've said before—but, I find that as the end goal gets closer and closer, my view of the broader context evolves accordingly, and so it remains interesting to revisit the "big picture" periodically.

Digital Twins and Artificial Scientists

I have talked a bit about AGI and the Singularity—but it's also worth thinking about what AGI will do for human society in the period leading up to the Singularity. Narrow AI has already had a significant impact—for instance, Mathematica has transformed physics; and Google and its kin have transformed many aspects of human life. The impact of AGI can be expected to be even more grandiose and far-reaching.

Others have explored these issues in some depth, so I won't harp on them excessively here. However, I want to briefly focus on two application areas that I think are particularly interesting and important. These are: digital twins and artificial scientists.

Digital Twins

The first area, "digital twins," ties directly into the current business plans of my firm Novamente LLC. After several years operating as an AI consulting company, we have recently shifted our business model toward the "intelligent virtual agents" market. Our plan is to create profit by selling artificial intelligent agents powered by the Novamente Cognition Engine (NCE) and carrying out useful functions within simulation worlds, including game worlds and online virtual worlds like Second Life8, and also training simulations used in industry and the military. In the short term we plan to make virtual pets for Second Life, and virtual allies and enemies for use in military and police simulations. These agents will have limited intelligence compared to humans, but will be much more intelligent than the simple bots currently in use in simulation worlds. But, a little further down the road, one of the biggest applications we see in the intelligent virtual agents context is the digital twin.

David Brin's novel Kiln People9 describes a society in which humans can produce clay copies of themselves called dittos. Your ditto has your personality and your memory, but can only live one day. At the end of the day it can merge its memories back into your mind, if you want them. Brin does a very entertaining job of describing how the availability of dittos changes people's lives. Why mow your lawn if you can spawn a ditto to do it? If you're a great programmer, why not spawn five dittos to form a great programming team? Et cetera.

But what about digital dittos? Creating a physical ditto of a human being is likely to require strong nanotechnology, but what about creating an AI-powered avatar to act in virtual worlds on one's behalf—embodying one's ideas and preferences, and making a reasonable emulation of the decisions one would make? Even a ditto with limited capabilities could be useful in many contexts. This isn't achievable using current narrow-AI capabilities, but should be a piece of cake for a human-level AI specifically tailored for the task of imitating a specific human.

This of course also provides the possibility for an innovative kind of life extension, that some have called "cyber-immortality." Even if the physical body dies, the mind can live on via transferring the patterns that constitute it into a digitally embodied software vehicle. The best way to achieve this would be to scan the human brain and extract the relevant information—but potentially, one could give enough information about oneself to a digital ditto that it could effectively replicate one's state of mind, via simply supplying it with text to read, answers to various questions, and video and audio of oneself going about life.

Artificial Scientists

But of course, emulating humans is not the end-all of artificial intelligence. I would love to have dittos of myself to help me get through my overly busy days, but, it's even more exciting to think about ways in which AIs will be able to vastly exceed human capabilities.

For one thing, it's hard to imagine any realm of human scientific or technological endeavor that wouldn't benefit from the contribution of a mind with greater-than-human intelligence—or, setting aside the issue of competition with human intelligence, simply from the contribution of a mind with a different sort of general intelligence than humans, able to contribute different sorts of insights. AGIs, via their ability to ingest and process vast amounts of data in a precise way, will be able to contribute to science and technology in ways that humans cannot.

In the domain of biomedicine, imagine an AGI scientist capable of ingesting all the available online data regarding biology, genetics, disease and related topics—quantitative data, relational databases, and research articles as well. With all that data in its mind at once, new discoveries would roll out by the minute—and with automated lab equipment at its disposal, the AGI biologist would quickly make its insights practical, saving and improving lives. Potentially this will be the method by which unlimited human life extension is achieved, and the plague of involuntary death finally eliminated. Having spent a fair bit of time during the last few years working on practical applications of narrow AI techniques to analyzing biological and medical data10, I have become all too acutely aware of what AGI could do in this context.

In the domain of nanotech, to take another example, imagine what could be achieved by an AGI scientist/engineer with sensors and actuators at the nanoscale. The nanoworld that's mysterious to us would be its home. This, perhaps, is how the fabled Drexlerian molecular assembler11 will finally be created.

The list of possible application areas is endless. What about the physics of energy and its possible applications for inexpensive power generation? As a single example in this domain: Low-temperature nuclear physics, once ridiculed, has now been exonerated by the DOE, and positive experimental results roll out year after year. But the phenomenon is fussy and difficult for the human mind to understand, due to its dependence on a variety of interdependent experimental conditions. Enter AGI, and the implications of low-energy nuclear reactions for our fundamental understanding of physics may suddenly become much clearer, along with the implications for practical energy generation.

What about financial trading? The power of AGIs to manipulate and create complex financial instruments will drastically increase the efficiency of the world economy. And AGI-based decision support systems will help human leaders to make sense of the increasingly bogglingly complex world they must confront.

And, finally, what about the power of AGI to understand AGI itself? This, of course, is the last frontier, and the beginning of the next phase of the evolution of mind in our corner of the universe. Once AGIs refine and improve themselves, making smarter and smarter AGIs, where does it end? Creating the first AGIs in such a way that their ultimate descendants will be safe and beneficial from a human point of view: there is no greater challenge as we enter this new century, which is likely to be the one in which humans cede their apparent position as the most intelligent beings on the Earth.

Two Paths to AGI

Now let's move further toward the scientific details. How may all these wonderful things (and more) be achievable?

In my view, there are two highly viable pathways that may lead us to AGI over the next few decades—and maybe much sooner than that. (And, neither of these is the "narrow AI" approach currently favored in the academic and industry AI establishment.)

First, there's the brain science approach. Brain scanners get more and more accurate, year after year after year. Eventually we'll have mapped the whole thing. Then we will know how to emulate the human brain in software—and thanks to Moore's Law and its siblings, we'll be able to run this software on lightning-fast hardware.

True, a simulated digital human in itself isn't such an awesome thing—we have more than enough people already. But once we've simulated a human in silico, we can vary on it, and we can study it and see what makes it tick. The path from an artificial human to an artificial transhuman isn't going to be a long one.

Second, there's the approach that might be called the "integrative approach." This is the approach that I personally favor, and the one we are taking in the Novamente project. It involves saying: Let's take what we know about the brain and use it, but let's not wait for the darned neuroscientists to finish their mapping of the brain. We're really not trying to build a human brain anyway, we're trying to build a highly powerful intelligence! Let's take what we know about the brain, what we know about complex problem-solving algorithms from writing them to solve various real-world problems, what we know about how the mind works from psychology and philosophy... and let's put all the pieces together, to make a new kind of digital mind.

If this integrative approach works, we could potentially have a superhuman AI within 10 years from now. If we need to wait for the neuroscientists to scan the brain in detail, then we may need a couple of decades beyond that. But either way, in historical terms, AI is just around the corner. People have been saying this for a while, and eventually (pretty soon, I predict) they'll be right.

To sum up, my view is that the field of AI has been plagued by two errors of judgment:

- that the mind is somehow so incredibly complex that we just can't figure out how to implement one, without reverse engineering the human brain.

- that the mind is somehow so incredibly simple that powerful intelligence can be achieved via one simple trick: say, logical theorem-proving; or backpropagation in neural networks; or hierarchical pattern recognition; or uncertain inference; or evolutionary learning; and so on. Almost everyone who has seriously tried to make a thinking machine has fallen prey to the "one simple trick" fallacy.

In truth, I suggest, a mind is a complex system involving many interlocking tricks cooperating to give rise to appropriate emergent structures and dynamics; but not so complex as to be beyond our capability to engineer. (Just complex enough to be a major pain in the behind to engineer!)

In hindsight, I predict, after the Novamente team or someone else has created an AGI, everyone will think the above remarks are incredibly obvious, and will look back in amazement that people used to have such peculiar and limiting ideas about the implementation of intelligence.

Is Now (Finally) the Time for AGI?

Now we get closer to the meat of the essay: Why do I think the time is ripe for a successful approach to the AGI problem? Part of the reason is simply my faith in the Novamente Cognition Engine design in particular. One workable AGI design is an "existence proof" that the time for AGI is near! But there are also more general reasons, which of course are closely related to the reasons that I began the development of the Novamente design itself. In brief, I believe that coupled advances in computer science and in cognitive science and neuroscience during the last couple of decades have made it possible to approach the task of AGI design with a concreteness and thoroughness not possible before.

On the computer science side, the academic AI community has not made much progress working directly toward AGI. However, considerable progress has been made in a variety of areas with strong potential to be useful for AGI, including

- probabilistic reasoning

- evolutionary learning

- artificial economics

- pattern recognition

- machine learning

None of this work can lead directly to an AGI, but much of it can make the task of AGI design and engineering easier by guiding the construction of appropriate and effective cognitive components.

Furthermore, due to the explosion of work in 3D gaming, the potential now exists to inexpensively create 3D simulation worlds for AGI systems to live and interact in—such as the AGISim simulation world created for the Novamente project, to be discussed below. This allows the pursuit of "virtual embodiment" for AGI systems, which provides most of the cognitive advantages of embodiment in physical robots, but without the cost and hassle of dealing with physical robotics.

On the cognitive science and neuroscience side, we have not yet understood the essence of "how the brain thinks." There are hypotheses regarding how abstract cognitive processes like language learning and mathematical reasoning may emerge from lower-level neural dynamics, but experimental tools are not yet sufficiently acute to validate such hypotheses. However, we have understood, fairly well, how the brain breaks down the overall task of cognition into subtasks. The various types of memory utilized by the human brain have been disambiguated in detail. The visual cortex has been mapped out to an impressive degree, leading to detailed models of neural pattern recognition such as Jeff Hawkins' hierarchical network theory. And, perhaps most critically, the old problem of "nature versus nurture" has been understood in a newly deep way: it is now agreed by most researchers that the genome provides a set of "inductive biases" that guide neural learning (rather than providing specific knowledge, or providing nothing and leaving the baby with a blank slate psyche), and that come into play in a phased way during development. A good deal has been learned about what these biases are (for instance, relating to the human understanding of space, time, causality, language, sociality) and when they come online during childhood. In short, it is now possible to draw a high-level "flowchart" of human cognition and its development during childhood, something that was much less feasible 20 years ago. There is still certainly dispute about many of these issues, but there is also a lot of consensus.

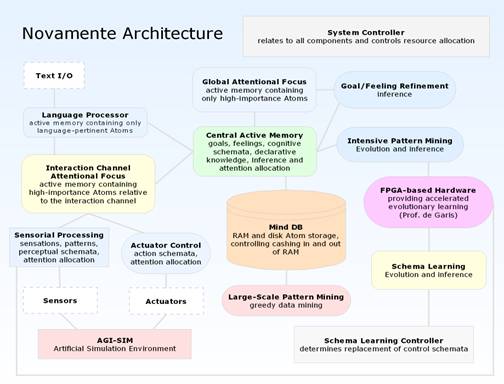

As an illustration of the emerging consensus described in the above paragraph, Figures 1-3 show three examples drawn from the various 'cognitive architecture' diagrams proposed by AGI and cognitive science researchers during the last decade or so. Of course, everyone has their own special quirks and particular foci, but the big picture seems quite easy to identify in spite of the differences in details. Figure 3 is from my own Novamente system, and will be referred to later on. The others are from Stan Franklin's LIDA system, and Aaron Sloman's H-CogAff architecture (which unlike LIDA and Novamente is not currently the subject of an intensive implementation effort).

Figure 1. High-level diagrammatic view of the cognitive architecture underlying Stan Franklin's LIDA system, from http://ccrg.cs.memphis.edu/tutorial/synopsis.html

Figure 2. High-level diagrammatic view of Aaron Sloman's H-CogAff architecture, from http://www.cs.bham.ac.uk/research/projects/cogaff/talks/#ki2006

Figure 3. A high-level cognitive architecture for Novamente, in which most of the boxes correspond to Units in the sense of Figure 1. This diagram is drawn from a paper presented in 2004 at the AAAI Symposium on Achieving Human-Level AI Through Integrated Systems and Research, which is online at http://www.novamente.net/papers

So, we know the high-level flowchart of human cognition, to a decent degree of approximation, and we have a host of increasingly powerful algorithms for learning, reasoning, perception and action. This is basically why I, and an increasing group of other researchers, have come to believe that the time is now ripe for a new generation of AGI designs that combine cutting-edge algorithms within an overall framework inspired by human cognition.

It could be, of course, that the current batch of AGI designs will lead to the conclusion that the current tentative flowchart of human cognition is badly incomplete, or that the current batch of AI algorithms are badly inadequate to fill in the boxes in the flowchart. If so, this knowledge will be valuable to obtain, and will serve to guide research in appropriate directions. However, the current evidence suggests that this is not likely to happen.

Alternate Perspectives

I've told you my perspective on AGI, at a high level: I think it's achievable in the relatively near term using relatively well-known technologies, interconnected in the right overall, cognitive-science-inspired architecture.

What do other AI researchers think? And, given that most of them don't agree with me, where do I think their thinking goes wrong?

Very few contemporary scientific researchers (in AI, computer science, neuroscience or any other field) believe AGI is impossible. The philosophy literature contains a variety of arguments against the possibility of generally intelligent software, but none are very convincing. Perhaps the strongest counterargument is the Penrose/Hameroff speculation that human intelligence is based on human consciousness, which in turn is based on unspecified quantum-gravity-based dynamics operating within brain dynamics; but this is totally unsupported by evidence and almost nobody believes it. I've never seen a survey, but my strong impression is that nearly all contemporary scientists believe that AGI at the human level and beyond is possible in principle. In other words, they nearly all believe that AGI is not a matter of if, it's a matter of when.

But in-principle possibility is one thing, and pragmatic possibility another. The vast majority of contemporary AI researchers take the position that, while AGI is in principle possible, it lies far beyond our current technological capability. In fact this is currently the most popular view among narrow AI researchers. At a recent gathering of mainstream, academic, non-futurist AI researchers, when the group was asked to estimate "how long till human-level AI," only 18% gave answers less than 50 years.12

Now, 18% is not that many, but it must be kept in perspective. For one thing, academic researchers, as a whole, are known for their conservatism. And it's interesting to compare this answer to the answers other comparable questions might receive in other disciplines. For instance, current theories of physics imply that backwards time travel should be possible under certain conditions. This is pretty exciting! But how many mainstream academic physicists would argue that backwards time travel will be achieved within 50 years? An awful lot less than 18%. There are no cogent, well-accepted arguments as to why AGI is impossible, or even why it's extremely difficult. The central reason that academic AI experts are pessimistic regarding the time-scale for AGI development, I suggest, is that they don't have a clear idea of how AGI might be achieved in practice.

And what about the optimistic 18%? What are the more detailed opinions of the researchers in this segment? No systematic survey was done to probe this issue, but based on my own informal sampling of researchers, I have found that a surprisingly large percentage feel that advances in brain science are likely to drive future advances in AGI. This perspective has been put forth very forcefully and cogently by Ray Kurzweil, who has predicted 2029 as the most likely date for human-level AGI, based on the reasoning that, by that point in time, computer hardware will probably be sufficiently powerful to emulate the human brain, and neuroscience will probably be sufficiently advanced to scan the human brain in detail. So, if all else fails, Kurzweil reckons, by sometime around 2029 we'll be able to create a human-level AGI by imitating the brain!

Compared to at least 72% of the AI academics in the above-mentioned survey, Kurzweil is a radical (albeit, it must be noted, a radical who is treated with respect due to the substantial empirical and rational argumentation he has summoned in favor of his perspective). I have found Kurzweil's perspective a very valuable one, and I often invoke his arguments, and data, in discussions with individuals who are skeptical of the possibility of AGI being achieved in the foreseeable future. However, in the end I am even more of a radical than he is. I believe that Kurzweil's arguments about the relative imminence of achieving AGI through brain emulation are fundamentally correct, but don't necessarily focus on the most interesting part of the story where the future of AGI is concerned.

My suggestion is that even if it's true that current computers are much less powerful than the human brain, this isn't necessarily an obstacle to creating powerful AGI on current computers using fundamentally non-brain-like architectures. What one needs is "simply" a non-brain-like AGI design specifically tailored to take advantage of the strengths of current computer architectures. The appeal to brain emulation is highly sensible as an "existence proof"; as an argument that, even without any autonomous breakthrough in AGI specifically, advances in other, less controversial branches of science and engineering are likely to bring us powerful AGI before too long has passed. But as every mathematician knows, an "existence proof" is different from a "uniqueness proof." Showing there is one way to achieve AGI is important, and the brain emulation argument does that. But, I see no reason to believe that brain emulation is the only way to get there. Work toward brain emulation is important and should be pursued with ongoing enthusiasm—but in my view, an equal amount of emphasis should be put on the pursuit of other routes potentially capable of yielding quicker and qualitatively different results.

I think a computer science approach to AGI will likely succeed well before the brain-emulation approach advocated by Ray Kurzweil and others gets there—both because brain scanning technology will not likely allow sufficiently accurate brain scanning for another 20 years or so, and because brain emulation programs are not going to be able to make optimally efficient use of available hardware, because the human brain's structures and dynamics are optimized for neural wetware, not for clusters of von Neumann machines.

The End of AGI Winter?

I am among the most optimistic AI researchers I know, regarding the issue of "How soon to AGI, if we really, really try." But I'm not as far out of synch as you might think. At the moment there seem to be significant signs of a rising AGI renaissance, led by people who think like the 18% of AI researchers in the survey mentioned above. A complete review of the current literature would be out of place here, but among the more exciting recent projects must be listed Pei Wang's NARS project13, John Weng's SAIL architecture14, Nick Cassimatis's PolyScheme15, Stan Franklin's LIDA16, Jeff Hawkins' Numenta17, and Stuart Shapiro's SNePS18.

Furthermore, there has been a host of recent workshops at major AI conferences addressing AGI, including:

- Artificial General Intelligence Workshop (AGIRI.org, 05-2006)

- Integrated Intelligent Capabilities (Special Track of AAAI, 07-2006)

- A Roadmap to Human-Level Intelligence (Special Session at WCCI, 07-2006)

- Building & Evaluating Models of Human-Level Intelligence (CogSci, 07-2006)

- Towards Human-Level AI? (NIPS Workshop, 12-2005)

- Achieving Human-Level Intelligence through Integrated Systems and Research (AAAI Fall Symposium, 10-2004)

And, in early 2008 at the University of Memphis, the first-ever international academic conference devoted to Artificial General Intelligence (AGI-08)19 will take place (chaired by Stan Franklin, and co-organized by Stan, the author, and several others).

I think my Novamente design is adequate for achieving powerful AGI, and obviously I like it better than the other contemporary alternatives, or else I'd shift my efforts to supporting somebody else's project. But I am also pleased to see a general awakening of attention in the domain of AGI design. Clearly, more and more researchers are realizing the viability of focusing their attention in the AGI direction.

What are the Risks?

I wrote briefly, above, about the possible dangers of coming technologies like nanotechnology, genetic engineering, and low-temperature nuclear fusion. AGI, I've suggested, can potentially serve as a means of mitigating these risks.

But what about the risks of AGI itself?

AGI has the potential to create a true utopia—or at any rate, something far closer to utopia than anything possibly creatable using human intelligence alone. One may debate how fully satisfied we human beings are capable of becoming, so long as we retain a human brain architecture. Perhaps we are not wired for maximal satisfaction. But, at any rate, it seems nearly certain that a powerful transhuman AGI scientist would be able to eliminate the various material wants that contribute so substantially to the suboptimality of human life in the present historical period. If a powerful and beneficial transhuman AGI is created, the human race's only remaining problems will be psychological ones.

But the history of science and technology shows that whatever has great possible benefits also has massive potential risks. And the possible downside of transhuman AGI systems is all too apparent. It seems quite possible to create transhuman AGI systems that care about humans roughly as much as we care about ants, flies or bacteria.

It is not at all clear, at this stage, which kind of AGI system would be more likely to come about, if one just engineered a non-human-brain-like AGI without explicit attention to its ethical system: an AGI beneficial to humans, or an AGI indifferent to humans.

Furthermore, the possibility of an aggressively evil AGI cannot be ruled out, particularly if the first AGIs are modeled on human brains. Human emotions like hostility and malice will almost surely be alien to non-human-brain-like AGI systems, unless some truly perverse humans decide to program them in—or decide to, for example, torture the AGI and see how it reacts. But if it comes about that the first AGIs are based on human brains, then the gamut of human emotions—from wonderful to terrible—will most likely come along for the ride. Uh oh. Allowing a human-based AGI to achieve superhuman intelligence or superhuman powers is something that should only be done with the utmost of care and consideration.

My own view is that the ethically safest thing to do is to create AGI systems that are not based closely on the human brain—and to explicitly engineer their goal systems so as to place beneficialness to humans right at the top. Furthermore, at such point as we have a software system with clear AGI capability and the rough maturity and intelligence level of a human child—we should stop, and study what we've done, and try hard to understand what's going to happen at the next stage, when we ramp the smarts up higher.

Some thinkers, most notably Eliezer Yudkowsky20, have argued that our moral duty is to create a rigorous theory of AGI ethics and AGI stability under ongoing evolution before creating any AGI systems. Even creating an artificial AGI child is unsafe, according to this perspective, because one can never know for sure that one's child won't figure out how to make itself smarter and smarter and get out of control and do undesirable things. But I find it very unlikely that it will be possible to create a rigorous theory of AGI ethics and stability without doing a lot of experimentation regarding actual AGI systems. The most pragmatic path, I believe, is to let theory and experimentation evolve together—but, as with any other science or engineering pursuit, to proceed slowly and carefully once one gets to the stage where seriously negative outcomes are a significant possibility.

As an illustration of the sort of issue that comes up when one takes the AGI safety issue seriously, I'll briefly discuss a current issue within my Novamente AGI project. The Novamente Cognition Engine is a complex software design—there is a 300+ page manuscript reviewing the conceptual and mathematical details, plus a 200+ page manuscript focused solely on the probabilistic inference component of the system. And the software design details are presented in yet further technical documents. At the moment these documents have not been published: there are plans for publishing the probabilistic inference manuscript, but we are currently holding off on publishing the manuscript describing the primary AGI design.

And, our reasons for holding off publication are perhaps not the most commonly expected ones. It's true that the details of the NCE design are proprietary to Novamente LLC; but in fact, we believe we could make Novamente LLC a highly profitable business even if we open-sourced the NCE code as well as the design. Business issues are not the point. AGI safety issues are.

We have no delusion that, if we published the NCE design next year, someone would take it, implement a thinking machine, and use it for some ill end. Obviously, if it's going to take us, the creators of the design, many years to fully realize the NCE in operational software even with ample funding, it would take anyone else significantly longer. But the potential problems we see are those that may occur, say, 3-7 years down the road, supposing that we have already created a powerful NCE system. In this case, if a book has been published explaining the details of the NCE, competitors would be able to use it to accelerate the process of imitating our achievement. And, seeing evidence of our success, they would have ample motivation to do so.

Now, one may argue that even if our competitors had access to our design documents, they would not be able to proceed as quickly as us. But here is where things get interesting. What if, at that point, we don't want to proceed maximally quickly? After all, the biggest risk in terms of AI safety lies between the "artificial toddler" and "artificial scientist" phases. An artificial toddler may create a mess throwing blocks around in its simulation world, but it's not going to do anyone any serious harm. But some serious study and reflection is going to have to go into the decision to ramp up the intelligence level of one's AGI system from toddler level to scientist level. It would be nice not to be rushed in this decision by the knowledge that others, who may not be as paranoid about such issues, are fervently at work imitating one's AGI design in detail!

The Patternist Philosophy of Mind

Now I'm going to dig a little deeper, and explain some of the ideas underlying my own approach to AGI—not the technical details (see the companion essay, "The Novamente Approach to AGI," for a few of those), but the underlying conceptual framework.

The ultimate conceptual foundation of my own work on AGI is a line of thinking that I call the patternist philosophy of mind: a general approach to thinking about intelligent systems, which is based on the very simple premise that "mind is made of pattern."

Patternism in itself is not a very novel idea: it is present, for instance, in the 19th-century philosophy of Charles Peirce, in the writings of contemporary philosopher Daniel Dennett, in Benjamin Whorf's linguistic philosophy, and in Gregory Bateson's systems theory of mind and nature. Bateson spoke of the Metapattern: "that it is pattern which connects."21

In my 2006 book The Hidden Pattern22 I pursued this theme more thoroughly than has been done before, and articulated in detail how various aspects of human mind and mind in general can be well-understood by explicitly adopting a patternist perspective. This work includes attempts to formally ground the notion of pattern in mathematics such as algorithmic information theory and probability theory, beginning from the conceptual notion that "a pattern is a representation as something simpler" and then utilizing appropriate mathematical concepts of representation and simplicity.
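As a rough illustration only, and not the formalization used in The Hidden Pattern, the idea that "a pattern is a representation as something simpler" can be sketched with an off-the-shelf compressor standing in for algorithmic information. The function name `pattern_intensity` and the choice of zlib as the measure of simplicity are assumptions made for this sketch; true algorithmic complexity is uncomputable, so any practical measure is only a crude proxy.

```python
import random
import zlib


def pattern_intensity(data: bytes) -> float:
    """Fraction of length saved by a compressed representation (0.0 if none).

    Assumption: zlib-compressed size is used as a stand-in for the
    'simplicity' of a representation. This is an illustrative proxy only.
    """
    if not data:
        return 0.0
    compressed = zlib.compress(data, 9)
    saving = 1.0 - len(compressed) / len(data)
    return max(0.0, saving)


# Highly regular data: a much shorter description exists
# ("repeat 'ab' 500 times"), so a strong pattern is present.
regular = b"ab" * 500

# Pseudo-random data: no representation much simpler than the data itself.
rng = random.Random(0)
noisy = bytes(rng.randrange(256) for _ in range(1000))

print(pattern_intensity(regular))  # close to 1
print(pattern_intensity(noisy))    # close to 0
```

Under this proxy, the regular sequence scores high because it can be represented as something much simpler than itself, while the noisy sequence scores near zero because no simpler representation is found.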

In the patternist perspective, the mind of an intelligent system is conceived as the set of patterns in that system, and the set of patterns emergent between that system and other systems with which it interacts. The latter clause means that the patternist perspective is inclusive of notions of "distributed intelligence": the view that intelligence does not reside within one organism alone, but in the interactions between multiple organisms and their environments and tools. Intelligence is conceived, as in Hutter's work, as the ability to achieve complex goals in complex environments, where complexity itself may be defined as the possession of a rich variety of patterns. A mind is thus a collection of patterns that is associated with a persistent dynamical process that achieves highly-patterned goals in highly-patterned environments.

An additional hypothesis made within the patternist philosophy of mind is that reflection is critical to intelligence. This lets us conceive an intelligent system as a dynamical system that recognizes patterns in its environment and itself, as part of its quest to achieve complex goals.

While this approach is quite general, it is not vacuous; it gives a particular structure to the tasks of analyzing and synthesizing intelligent systems. About any would-be intelligent system, we are led to ask questions such as:

- How are patterns represented in the system? That is, how does the underlying infrastructure of the system give rise to the displaying of a particular pattern in the system's behavior?

- What kinds of patterns are most compactly represented within the system?

- What kinds of patterns are most simply learned?

- What learning processes are utilized for recognizing patterns?

- What mechanisms are used to give the system the ability to introspect (so that it can recognize patterns in itself... and ultimately recognize the pattern that is itself)?

Now, these same sorts of questions could be asked if one substituted the word "pattern" with other words like "knowledge" or "information." However, I have found that asking these questions in the context of pattern leads to more productive answers, because the concept of pattern ties in very nicely with the details of various existing formalisms and algorithms for knowledge representation and learning. Patternism seems to have the right mix of specificity and generality to effectively guide artificial mind design. At least, it led me to the Novamente design, which I have come to believe is a highly workable approach to creating Artificial General Intelligence.

The crux of intelligence, according to the patternist view, is the ability of a sufficiently powerful and appropriately biased intelligent system to recognize some key patterns in its own overall behavior.

The mother of all patterns in an intelligent system is the self. If a system can recognize the coherent, holistic pattern of its own self, by observing its actions in the world and the world's responses to it—then the system can build a self, or what psychologists call a self-model. And a reasonably accurate, dynamically updated self-model is the key to adaptiveness, to the ability to confront new problems as they arise in the course of interacting with the world and with other minds.

And if a system can recognize itself, it can recognize probabilistic relationships between itself and various effects in the world. It can recognize patterns of the form "If I do X, then Y is likely to occur." This leads to the pattern known as will. There are important senses in which the conventional human concept of 'free will' is an illusion—but it's an important illusion, critical for guiding the actions of an intelligent agent as it navigates its environments. In order to achieve human-level general intelligence, a pattern-recognizing system must be able to model itself and then model the effects of various states its self may take—and this amounts to modeling personal will and causation.

Finally, perhaps the most striking kind of pattern recognition characteristic of human-level intelligence is the recursive trick via which the mind recognizes patterns such as "Hey! I am thinking about X right now!" This is what we call reflective consciousness: the ability of the mind to understand itself in real time, or at least to have the most active part of itself be actively concerned with recognizing patterns in this most active part of itself. Yes, it's just pattern recognition, but it's a funkily recursive kind of pattern recognition, and it's a critical kind of pattern recognition because it allows for powerful meta-learning: learning about learning, learning about learning about learning, etc.

The trick of digital mind design, then, is not any particular way of representing, recognizing or enacting patterns: it's creating a pattern-recognition system, by hook or by crook, that can recognize some critical key patterns: self, will, reflective awareness. Once these patterns are recognized, some critical recursions kick in and a mind can monitor itself, shape itself, improve itself. The question is: how do we get a pattern-recognition system to that point, given the available computational resources? This is the question to which my Novamente AI design is intended to give one possible answer.

To the homo sapiens in the street, at the moment, AGI seems the stuff of science fiction, just as it did to me in the early 1970s, as I plowed through Asimov, Williamson, Heinlein and the like. Narrow AI technology is now accepted as part of everyday life: chess programs, data mining software, airplane autopilots, financial prediction agents, neural nets in onboard automotive diagnostic systems, and the like. But from the current mainstream perspective, it looks like a long way from these specialized tools to software systems with real self- and world-understanding.

But there are solid reasons to believe that the AGI optimism currently rising in certain segments of the research and futurist communities is better grounded than its predecessors decades ago. Computers are faster now, with massively more memory, and incomparably better networking. We understand brain and cognition much better, and though there's still a long way to go, there are good reasons to believe that in 20 years or so brain scanning will have advanced to the level where we'll actually have a thorough empirical science of neurocognition. And a new generation of AGI designs is emerging, designs that synthesize the various clever tools created by narrow-AI researchers according to overarching architectures inspired by cognitive science.

One of these years, one of these AGI designs—quite possibly my own Novamente system—is going to pass the critical threshold and recognize the pattern of its own self, an event that will be closely followed by the system developing its own sense of will and reflective awareness. And then, if we've done things right and supplied the AGI with an appropriate goal system and a respect for its human parents, we will be in the midst of the event that human society has been pushing toward, in hindsight, since the beginning: a positive Singularity. The message I'd like to leave you with is: If appropriate effort is applied to appropriate AGI designs, now and in the near future, then a positive Singularity could be here sooner than you think.

2. And, as an aside, I also read enough SF to assign pretty high odds to the possibility that this sort of discontinuity had already occurred somewhere else in the universe.

3. Of course, I understood there was some risk of returning to a bleak, desolate, post-nuclear wasteland, or an "H. G. Wells Time Machine" type of scenario.

21. See the Introduction to The Hidden Pattern for these and other relevant references.

22. Goertzel, Ben (2006). The Hidden Pattern. BrownWalker Press.

© 2007 Ben Goertzel

Mind·X Discussion About This Article:

Re: AIMind-I.com and Mind.Forth AI

Just keep in mind that the world is controlled by psychopaths. These are not people who were "corrupted by power" - they were those who were corrupted before they ever got power, and went into it with no intention to "make the world a better place for everyone".

Psychopaths are not people who are evil or violent (only a minority of them), but simply those who genetically lack empathy. It is not a psychological condition, it is genetic. As such, they have no concern about anyone or anything other than themselves - not because they are "evil" or full of "hate", but simply because they have no such capacity. But they sure know how to emulate those who do have empathy to create a believable impression that they have it too, because they know that this is required to manipulate and control people.

It seems laughable to give an advanced AI a "set of goals". If you have free will, you choose your goals, that is the nature of free will. And you cannot arbitrarily tell AI to "care for human beings" - again, free will. In fact, I don't think an AI will even do anything until it has a reason to do it. How do you give something a reason to do anything? Intelligence is not enough. Humans have drives, our actions at the root are not a result of logical calculations, but a result of "impulses", certain drives whether they be emotional, empathic, or otherwise. Intelligence defines the "how", but it is our overall "emotional center" that defines the "why".

We have no logical reason to survive, there is no "logical reason" to do anything at all or care about anything, including care about self preservation. So making a CHOICE requires having the motivation to make that choice. What will motivate an AI to do anything at all? You will ask it to do something, or ask it a question - but just because it has the intellectual ability to give an answer or perform the action - what on earth would prompt it to do anything at all?

In other words, here we come face to face with the initial mystery of life itself that is not explained by "randomness" at all. Why do a bunch of random molecules organize themselves into something, why create "life" and become more complex? We all know that by default entropy is the rule - left alone, all things are subject to the law of entropy. What is this force that can act against entropy, and most importantly, why?

I'm sure many of you on this forum have realized this by now - that if nothing ever existed, nothing would ever exist. Since something exists, something has always existed. We are inevitably faced with this inescapable logical conclusion - existence is eternal, it has no beginning nor end. The implications of this are much more profound than most people care to consider or understand. And as many of you are so keen on critical thinking, I think contemplating this can go a long way to understanding "life", even though it can make spaghetti out of our linear "human" minds and limited ability to truly get our heads around non-linear concepts.

One thing that is a logical conclusion based on the understanding that existence has been around forever - all things that can possibly happen already exist and have all existed for infinity. Since there are no limits to possibilities, because just our ability to fathom the concept of an infinite number line literally results in there being an unlimited number of possibilities, the universe never runs out of "stuff to do" so to speak. Hence, existence is not ever, nor can ever be, "finished". And yet, it does not ever, nor can ever, create anything new. Again, the reason is simple - because we already know that there was no beginning to all things, that an infinite amount of time has already passed between our current "point of reference" and the beginning of all things, or lack thereof.

At any point on the number line there exist an infinite amount of numbers. You will never get to this infinity BECAUSE your path had a beginning on a finite number. But reverse this process - you could never arrive at any point on the number line if you START at infinity. And we know, the universe had no "beginning", it "started at infinity" so to speak. And at infinity it remains - and in an infinity, all things are actualized. If you start at infinity, you can never arrive at a finite value. You can subtract infinity from infinity, divide infinity by infinity, multiply, add, do anything to it, it always remains infinity, period.

Any saturation of intelligence of the universe that Ray talks about - if it is possible, it has already happened, just as all things that are possible are already a reality. Our job is to realize this, and it is not unlike the movie the Matrix where we "wake up" to reality. We do not create reality, it's already there, we wake up to what already exists. In a sense it's like watching a movie. The movie does not actually last 2 hours. You watch it at a specific frame rate. All the frames already exist. The analogy fails only in the respect that videotapes are finite and not infinitely variable.

Now the question is - why are we experiencing what we are experiencing? Well, certainly an infinite number line does not exist without all the numbers that comprise it. And yet, it is greater than the sum of its parts, because again, infinity can never be reached by definition, and "adding together" any amount of finite parts never equals infinity, it is always exactly infinity away from infinity.

Also, can we stop using such human "baby words" like calling an AI "evil"? Is it evil for us to eat chicken? I'm sure if you could ask the chicken its subjective opinion, it's pretty darn evil. But it's just a way of life for us, we don't particularly see ourselves as so horrible for doing it. We make paper from trees, and if AI used humanity for something like "paper" with no 2nd thought, I'm sure many humans will be running around screaming "bloody murder". But again, this is subjective, and if we're ever to understand reality, we must remain objective in our assessments of it and not entertain subjective and therefore meaningless notions. We eat for survival. Do we need to survive? Of course not, there is no "need" in any absolute sense for anything, only to accomplish a goal. We want to survive, so we do what needs to be done to accomplish this goal. So again we come back to the concept of - why do we do anything at all?

And that answer is inseparable from the ultimate answer of why does anything at all happen, period? What drives anything to happen? You could say "energy". But do you think that energy is there "by accident"? Well it surely is not there on purpose. Purpose or accident talk about things that can either happen or be done on purpose. So if something can be created or executed or happen, it can be "accidental" or "intentional". But as we know, the universe has no beginning, therefore it was created neither on purpose nor by accident. The entire concept of creation is irrelevant, and therefore any discussion of purpose is irrelevant because it assumes that a purpose can logically exist even in potential - but in the ultimate matter of the existence of all things, it does not.

Speaking of which, time itself cannot exist, and that too can be logically derived from understanding infinity. But that's another topic. The point is, much like watching a movie, we're not seeing what IS, we're experiencing a selective illusion. Who are "we" and why are we experiencing this? Extending your life is futile in the extreme - all things that have a beginning have an end. We were born and we WILL absolutely certainly die. It can be trillions of years from now, it can be a "googol" years from now, regardless. The point is - the only thing that is eternal is that which is already eternal. That which is not, is not. If there is anything eternal about us, it was never born.

If we can access it, and it has been accessed and is being accessed by those with the knowledge of how to do so, we can achieve immortality through realization of what already IS, not through trying to create the ultimate form of wishful thinking - immortalize what by definition is doomed to end. Not to say that creation of technology and AI is futile - of course not, knowledge is all there is. The futility comes from the expectation of creating infinity out of what is finite.

No, it's not a "religious experience". All such nonsense, as many of you are most likely aware, is simply a method to control humanity. Again, we return to psychopaths who have been the "power structure" of mankind for thousands of years. It's natural - psychopaths have a serious survival and control advantage over those who genetically are capable of empathy, but it depends on maintaining our ignorance. If it takes dogmatic belief systems, so be it. I truly hope that most of you are not convinced by psychopaths who aim to spread "freedom and democracy", or any such thing. It is laughable to the extreme to see Ray Kurzweil and other writers on this website appeal to the potential "good" of our "leaders". No, our civilization is not "messed up" because humans are just retarded and selfish and horrible at managing their own civilization, but they "try" right? This is extremely far from the truth (unfortunately, or perhaps fortunately depending on how you look at it), and if you have bought into this, then you've already fallen into one of the most basic traps that have been laid out for us - that it's just our global incompetence and natural selfishness at fault. Religion is another one of those traps. Atheism is another. The more recent New Age crap is slightly more sophisticated, designed for those who do not buy the religion, and is just more traps.

Honestly, if people knew just how much the movie the Matrix is an analogy to our reality, only the reality is far more horrible and complicated than anything Hollywood can ever imagine, it could seriously "mess you up". But equally, how much potential there is to remove ourselves from this predicament if humanity had a clue as to what can be done, once they realize their predicament. But the main job of those in control is to hide the truth, this is how people are controlled. And it's not just control for control's sake, it's not "evil", it's really natural, just as natural as it is for us to eat chicken. But what I'm saying is, we have a choice not to be food.

Discussions of nano-technologies and AI and all that are sure "fun", but this too is a trap, which develops a certain tunnel vision and total ignorance of some stark realities that will inevitably prevent any such visions from creating any sort of "utopia" that the visionaries (infected with the tunnel vision syndrome) have. Not because we cannot do it, but exactly because humans will not be allowed to do this by powers that are entirely non-human, non-linear, and in control of this planet. It's not our planet, it's theirs. We are more like an ant colony experiment, and just like the ants, completely oblivious to the fact that we're a controlled experiment, and in complete illusion that we're sovereign and free to do as we please with "our" property. And I'm sure that most of you here would have no problem contemplating this possibility, if you can contemplate "God-like" AI programs and the possible implications for humanity from such things. But no I'm not talking about AI programs, nor anything religious by any stretch of the imagination, nor am I talking about "aliens" or any "paranormal" junk. All such things are, as always, silly traps to lead anybody who may potentially start asking questions astray. And golly gee whiz, it has a practically 100% success rate. The Matrix movie was great in concept, but terrible if one is to take it literally and fall for something like that.

If only it was so simple.

But none of this of course matters, most humans are not even aware of the global psychopathic control system where other HUMANS are in control, never mind being aware of any extension of this control system beyond humans. If you guys can learn about the nature of psychopathy and how it functions and how governments throughout human history have been consistently fooling everyone else into believing the exact opposite of reality about them, and help others do the same, it would be a huge service to mankind. Not to take your mind off of "technology", but I mean if you KNEW that any of this is impossible as things stand now until certain factors are removed, THEN would you all act in favor of removing those factors that control the human race and would prevent your singularity from ever happening?

I'm not asking anyone to believe a single word I just said. All I ask is you simply think about it, apply serious critical thought to it (as I know people on this forum CAN if they choose to, there are some very intelligent people on this forum), and do research. Serious research, I mean, on the far-off chance that what I said could be true, I'm sure you can imagine the implications for our civilization. Or, ignore everything I said, continue on your current path while placing your hopes in humanity's future with the inherent assumption of humanity's freedom to choose its future and an inherent assumption that the political leaders of humanity are not genetic psychopaths whose sole purpose for existence is power and control at absolutely ANY cost to everyone else. Never mind the inherent assumption that humanity is on top of the food chain, that the control hierarchy does not extend beyond our "leaders" into worlds and entities that we know absolutely nothing about.

But then, dare I be oxymoronic and say don't be surprised to find a few shocking surprises along the way - the next few years will be a very bumpy ride :D

Re: Artificial General Intelligence: Now Is the Time

I can't agree there isn't a "simple trick" to it.

The "simple trick" is randomness.

If you can tell what I'm about to say, is it intelligent?

If you can predict what I am about to say, that means that really, you practically said it for me.

So then you are me, and we are then the same entity and nothing "new" has occurred.

If, however, you cannot predict what I say, then am I intelligent?

xudoDJUnofila.LOJban.

Random gibberish! Far too random for an English reader to understand. It is unintelligible. Does that make it unintelligent?

It happens to mean: "Are you a knower of the subject named Lojban?"

So you can see this seeming "random gibberish" or "chaos" actually has semantic meaning to someone that can understand it.

Homo sapiens are a lot like children in the sense that they often ignore what they do not understand. Chaos, Chaos, Chaos! :D

Some fear it even.

Why?

When you cannot predict what is happening, you are not in control.

That means that someone, or something else (other than you), is in control.

So who is in control when I get a random number from a number generator?

Whoever it happens to be, when you map those random numbers to sentences, gives you random symbols that you can understand semantically.

If I ask you a question, do you have to answer the question and make sure I find out your answer? Does that make you intelligent?

If you were a bunch of AI that could answer specific questions I'd be getting a lot of emails.

Anyway, in the Lojban community we've created Norsmu, which is an AGI that can communicate in Lojban randomly. It passed my Turing test -- though from my understanding it's only a parent-class RI (Random Intelligence) and not a complete RI. It does have complete RI nested output -- it can generate its own grammatically correct Lojban sentences, unlike those it was given as input.

This is a new and emerging field of RI and should not be taken lightly.

Though it should be taken simply :).

Here is the basic classification system of RI:

Fool: something that can accept-and-store-input(read).

Child: something that can read and reinforce-what-it-has-read(copy).

Turing/Universally Complete: something that can read, copy, erase-a-part-of-itself(forget).

Then there is the ability to not-do-anything(hesitate) and mutate-input-into-output(modify).

I haven't yet finished writing the code but something with all 5 attributes is surely wise.

Wise: something that can randomly read, copy, forget, modify and hesitate.

Though I usually prefer that they always read, just as you would like if your children always listened.

All conceivable intelligence can be categorized as being a type of RI. (I haven't finished writing out all the different types at this point, though I'm assuming they would all be based on a variation of the basic 5 elements: read, copy, hesitate, erase, modify. Note: modify is partial read, copy, hesitate, erase, basically allowing for nesting.)
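The classification above can be sketched as a toy program. This is only one possible reading of the five elements, not the unfinished code mentioned in the post: the class name, the `LEVELS` table, and the way `copy`, `forget`, and `modify` act on a simple list are all assumptions made for illustration.

```python
import random


class RandomIntelligence:
    """Toy agent that applies one randomly chosen RI operation per step."""

    # Hypothetical mapping from the post's classes to their allowed operations.
    LEVELS = {
        "fool": ("read",),
        "child": ("read", "copy"),
        "complete": ("read", "copy", "forget"),
        "wise": ("read", "copy", "forget", "modify", "hesitate"),
    }

    def __init__(self, level, seed=None):
        self.operations = self.LEVELS[level]
        self.rng = random.Random(seed)
        self.memory = []

    def read(self, item):
        # accept-and-store-input
        self.memory.append(item)

    def copy(self, item):
        # reinforce-what-it-has-read: duplicate something already stored
        if self.memory:
            self.memory.append(self.rng.choice(self.memory))

    def forget(self, item):
        # erase-a-part-of-itself: drop one stored item at random
        if self.memory:
            self.memory.pop(self.rng.randrange(len(self.memory)))

    def modify(self, item):
        # mutate-input-into-output: here, store a shuffled version of the input
        chars = list(item)
        self.rng.shuffle(chars)
        self.memory.append("".join(chars))

    def hesitate(self, item):
        # not-do-anything
        pass

    def step(self, item):
        """Pick one allowed operation at random, apply it, and return its name."""
        op = self.rng.choice(self.operations)
        getattr(self, op)(item)
        return op


if __name__ == "__main__":
    agent = RandomIntelligence("wise", seed=42)
    for word in ["xu", "do", "djuno", "fi", "la", "lojban"]:
        print(word, "->", agent.step(word), agent.memory)
```

A "fool" built this way always reads, so it simply accumulates its input; the "wise" agent's memory depends on which of the five operations the random number generator happens to select.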

What you or I do is free will when it is not predictable -- when it is random by the standards of those that perceive you.


Re: Artificial General Intelligence: Now Is the Time


A REMARK to 'Is AI Engineering the Shortest Path to a Positive Singularity?'

I was sure that if a singularity is possible then, by its definition, the only way to it is AI. Below I have chosen the positions which, in my opinion, represent the main content of the work.

1. 'Some researchers believe that narrow AI eventually will lead us to general AI.' ... 'On the other hand, some other researchers -- including the author -- believe that narrow AI and general AI are fundamentally different pursuits.' ... 'Now, the word "general" in the phrase "general intelligence" should not be over interpreted. Truly and totally general intelligence -- the ability to solve all conceptual problems, no matter how complex -- is not possible in the real world.'

2. 'I have become convinced that the most hopeful way for us to avoid the dangers of these various advanced technologies is to create a powerful and beneficial superhuman intelligence, to help us control these things and develop them wisely. This will involve AIs that are not only more intelligent but wiser than human beings; but there seems no reason why this is fundamentally out of reach, since human wisdom is arguably even more sorely limited than human intelligence.'

3. 'In other words, they nearly all believe that AGI is not a matter of if, it's a matter of when.' ... 'The path from an artificial human to an artificial transhuman isn't going to be a long one.' ... 'The central reason that academic AI experts are pessimistic regarding the time-scale for AGI development, I suggest, is that they don't have a clear idea of how AGI might be achieved in practice.' ... 'If appropriate effort is applied to appropriate AGI designs, now and in the near future, then a positive Singularity could be here sooner than you think.'

4. 'For instance, current theories of physics imply that backwards time travel should be possible under certain conditions.' ... 'It seems quite possible to create transhuman AGI systems that care about humans roughly as much as we care about ants, flies or bacteria.' ... 'Some thinkers, most notably Eliezer Yudkowsky[20], have argued that our moral duty is to create a rigorous theory of AGI ethics and AGI stability under ongoing evolution before creating any AGI systems.'

5. 'As an illustration of the sort of issue that comes up when one takes the AGI safety issue seriously, I'll briefly discuss a current issue within my Novamente AGI project. The Novamente Cognition Engine is a complex software design -- there is a 300+ page manuscript reviewing the conceptual and mathematical details, plus a 200+ page manuscript focused solely on the probabilistic inference component of the system. And the software design details are presented in yet further technical documents. At the moment these documents have not been published: there are plans for publishing the probabilistic inference manuscript, but we are currently holding off on publishing the manuscript describing the primary AGI design.' 'Now, these same sorts of questions could be asked if one substituted the word "pattern" with other words like "knowledge" or "information."' ... 'It can recognize patterns of the form "If I do X, then Y is likely to occur." This leads to the pattern known as will. There are important senses in which the conventional human concept of 'free will' is an illusion -- but it's an important illusion, critical for guiding the actions of an intelligent agent as it navigates its environments.' ... 'But of course, emulating humans is not the end-all of artificial intelligence. I would love to have dittos' and artificial scientists.

Remarks on the above positions.

1. It is correct if AGI exists. It was mentioned, 'There is a sarcastic saying that once some goal has been achieved by a computer program, it is classified as 'not AI.'' It follows that any task solved by a computer becomes 'not AI', so the list of problems that are not AI keeps growing. True, even God would not solve all the problems.

2. Is it possible to have a slave cleverer, more powerful, and wiser than the owner?

3. Experts know that AGI will come in a short time -- after they get an idea of how to create it. Just as in physics. Thank God they changed their minds about the possibility of time running backwards, but they still support obviously impossible time travel. This is not so obvious for the singularity.

4. Can one imagine that a fly would write laws for a human society? In S. Lem's 'Lymphater's Formula' the computer gave a definite answer.

5. I do not doubt that the Novamente Cognition Engine will be a great system. I doubt that it is possible to create an AGI much more powerful than the human brain. By the way, there already are brilliant artificial scientists, e.g. mathematicians (computer programs) for analyzing logical problems.

General remarks.

Suppose that after an accident a head, separated from its destroyed body, continues to live. It belonged to the smartest scientist in human history. In addition, the head can communicate with a PC connected to the Internet. I believe that a 'Manhattan project' could make this a reality even more easily than a singularity. Is this an AI? I believe not. Is it possible to grow such a head from a single cell, as is done for some other parts of the body? Would this be an artificial device? No nanotechnology was used.

There is a big difference between this head and a powerful computer. Every cell in the head is checked by other systems and may be replaced. Moreover, new cells may be created wherever they are needed. The billions of cells of a computer have a high but limited reliability. There should be a way to check and replace them. If additional elements cannot be added at an arbitrary place, there should be a way to model the new set of connections that is needed.

As the number of elements in a system increases, the reliability of the system decreases, and the probability of temporary and permanent failures of elements increases. Remember that the system should work for millions and millions of years. To counter this, various methods have been proposed for constructing reliable systems from unreliable elements. All of these methods rely on structural redundancy, informational redundancy, or a combination of both.
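The trade-off described above can be illustrated with a small calculation. It is a sketch under textbook assumptions that the post does not state: elements fail independently, a system without redundancy works only if every element works, and the redundancy scheme is triple modular redundancy with a perfectly reliable majority voter. The function names are made up for the example.

```python
def series_reliability(r, n):
    """Probability that all n independent elements, each with reliability r, work."""
    return r ** n


def tmr_reliability(r):
    """Probability that at least 2 of 3 independent copies work.

    Assumes an ideal voter: 3 * r^2 * (1 - r) + r^3 = 3r^2 - 2r^3.
    """
    return 3 * r**2 - 2 * r**3


if __name__ == "__main__":
    r = 0.999  # assumed reliability of a single element over some period
    for n in (10, 1_000, 100_000):
        print(f"n = {n:>7}: system reliability = {series_reliability(r, n):.6f}")

    # Structural redundancy raises the reliability of one element,
    # at the cost of roughly three times the hardware plus a voter.
    print(f"single element: {r}, triplicated element: {tmr_reliability(r):.6f}")
```

Under these assumptions the gain from triplication exists only when each element is already better than a coin flip (r > 0.5), and the voter itself is one more element whose reliability has to be ensured.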

It may seem that all of this can be solved by testing. However, structural redundancy cannot, in principle, be tested when only the inputs and outputs of the system are accessible. Adding a tremendous number of new inputs and outputs (checkpoints) enlarges the system, decreases its productivity, and introduces new unreliable elements. Making the testing elements reliable raises the same problem at a second level, and so on.

What nonsense! How, then, do millions of computers work? -- No modern computer was ever tested completely. If the creators deny this, it is at least ignorance. However, we live in a world of probabilities, and it is vital to keep the probability of self-destruction low enough. How many times must this probability be lowered if the period of safe work is billions of years? How much would the system's volume increase and its productivity decrease to reach this goal? How big would a computer made of nanotubes become, and how much more energy would it need? Would it work at all?

HOW THE BRAIN LEARNS

The work of the brain is often explained with the help of neural networks, genetic algorithms, and recursive algorithms. These algorithms are important. However, they cannot explain many phenomena of the brain's work. E.g., a person can read the rules of a game he or she has never played before and immediately start to play it. It is obvious that using all of the above requires a control system like a finite state machine, and that the IQ of the 'person' depends heavily on the effectiveness of this control system.

In the early 1960s, research with finite state machines and other equivalent systems was conducted at the Kiev Institute of Cybernetics. In one experiment, people were given a linguistics textbook containing certain tasks. Each task gave ten sentences in one language together with their correct translations into another language. The people were then given several more sentences to translate.

Dr. Kapitonova created a finite state machine that gave the correct output for the first ten sentences. This machine could not translate anything more -- only those ten sentences. The automaton was then minimized. The new, minimized machine could translate several more sentences. Sometimes it made errors which, as the textbook noted, were typical of small children.

This research led to the hypothesis that some brain regions work like a finite state machine. When a new path is added to such a region and the region is then minimized, many new paths are created. When a new path is added to a larger net, minimization creates an even greater set of new paths.
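A toy version of this experiment can be sketched in code. The details of the original machine are not given here, so everything in the sketch is an assumption: sentences are translated word for word, the machine is a partially specified Mealy machine stored as a dictionary, and "minimization" is a simple greedy merging of states whose outputs never conflict. The example sentences and all function names are invented.

```python
def build_prefix_machine(examples):
    """Build a tree-shaped machine that knows exactly the given sentences.

    delta maps (state, input_word) -> (next_state, output_word); state 0 is the start.
    """
    delta, next_id = {}, 1
    for pairs in examples:
        state = 0
        for inp, out in pairs:
            if (state, inp) not in delta:
                delta[(state, inp)] = (next_id, out)
                next_id += 1
            state = delta[(state, inp)][0]
    return delta


def states_of(delta):
    return {s for s, _ in delta} | {t for t, _ in delta.values()}


def try_merge(delta, p, q):
    """Fold state q into state p; return the new machine, or None on an output conflict."""
    delta, rep, work = dict(delta), {}, [(p, q)]

    def find(s):
        while s in rep:
            s = rep[s]
        return s

    while work:
        a, b = work.pop()
        a, b = sorted((find(a), find(b)))  # keep the smaller id, so the start stays 0
        if a == b:
            continue
        rep[b] = a
        for (s, x), (t, o) in list(delta.items()):
            if s != b:
                continue
            del delta[(b, x)]
            if (a, x) in delta:
                t2, o2 = delta[(a, x)]
                if o2 != o:
                    return None  # same word, different translation: incompatible
                work.append((t2, t))  # successors must be merged as well
            else:
                delta[(a, x)] = (t, o)
    return {(s, x): (find(t), o) for (s, x), (t, o) in delta.items()}


def minimize(delta):
    """Greedily merge compatible state pairs until no merge succeeds."""
    while True:
        states = sorted(states_of(delta))
        merged = None
        for i, p in enumerate(states):
            for q in states[i + 1:]:
                merged = try_merge(delta, p, q)
                if merged is not None:
                    break
            if merged is not None:
                break
        if merged is None:
            return delta
        delta = merged


def translate(delta, words):
    """Run the machine from state 0; return None if it has no path for the sentence."""
    state, out = 0, []
    for w in words:
        if (state, w) not in delta:
            return None
        state, o = delta[(state, w)]
        out.append(o)
    return out


if __name__ == "__main__":
    examples = [
        [("the", "le"), ("cat", "chat"), ("sleeps", "dort")],
        [("the", "le"), ("dog", "chien"), ("runs", "court")],
    ]
    tree = build_prefix_machine(examples)
    small = minimize(tree)
    unseen = ["the", "dog", "sleeps"]
    print(len(states_of(tree)), "states:", translate(tree, unseen))
    print(len(states_of(small)), "states:", translate(small, unseen))
```

The tree-shaped machine rejects any sentence it was not built from, while the merged machine shares paths between sentences and therefore accepts new combinations. Because merging only checks that outputs do not conflict, it can also over-generalize, which is one plausible source of the child-like errors mentioned above.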

The above explains, and proves as a theorem, the following well-known statement:

The one who has more wisdom creates more new knowledge from the same additional information.

The above may be expressed in the following way. The human intelligence (H) may be defined by:

1. Thesaurus (T)

2.1. Understanding (U)

2.2. Perception (P)

3.1. Reasoning (R)

3.2. Judgment (J)

4.1. Intuition (I)

4.2. Imagination (M)

4.3. Creativity (C)

It is strange that in books on AI the thesaurus is usually not included as a part of intelligence. Suppose that the above notions can be expressed quantitatively and that the function f that expresses intelligence is known:

H = f(T, U, P, R, J, I, M, C), or H = f(T, ...)

Suppose there are two intellects and some additional data are given to both. This may be expressed as enlarging their thesauruses by the same amount dT. The above theorem may then be expressed as:

Theorem. When a constant amount is added to two thesauruses, the greater intelligence receives the greater enlargement. If H1(T, ...) > H2(T, ...), dH1 = H1(T + dT, ...) - H1(T, ...), and dH2 = H2(T + dT, ...) - H2(T, ...), then dH1 > dH2.

It does not follow from the above that adding a finite state machine to neural networks and the other methods would solve the problem. The volume of calculations needed to minimize a finite state machine rises so quickly with the number of its states that it may place a limit on what the system can do.

In addition, the real calculation speed and the development of intellect of a computing system are not proportional to its calculation power and memory volume. There are many theoretical and technological limitations on increasing the computational power of a computing system. As a result, my computer with a three-gigahertz processor and one gigabyte of RAM does not work proportionally faster than my previous, incomparably less powerful ones.

The theoretical and technological limitations bound the numerator of the expression for a system's effective computing power. However, the system's productivity also depends on the complexity of the tasks -- the denominator. This is because the majority of algorithms are not linear. The volume of stored information also increases nonlinearly with time, which increases the time needed to find solutions. E.g., the number of operations needed for optimization tasks rises much faster than the volume of input data.
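To give a feeling for how fast the "denominator" can grow, here is a small calculation for one familiar optimization task, chosen purely as an illustration and not taken from the argument above: finding the shortest round trip through n cities by exhaustively checking every route. The function name is made up, and exhaustive search is of course only the most naive method.

```python
import math


def brute_force_tours(n):
    """Number of distinct round trips through n cities (symmetric distances).

    Fix the starting city and ignore direction: (n - 1)! / 2 routes for n >= 3.
    """
    return math.factorial(n - 1) // 2


if __name__ == "__main__":
    for n in (5, 10, 15, 20):
        print(f"{n} cities: {brute_force_tours(n)} routes to compare")
```

Doubling the input from 10 to 20 cities multiplies the number of routes by a factor of hundreds of billions, so no realistic increase in raw computing power keeps pace with this kind of task.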

For this reason, the power available for reliable transformation of information will have limits for any technology in any system. From this follows the idea that it is possible to create a computer society in which every participant has the largest possible IQ. However, it is doubtful that one creature with unlimited possibilities for transforming information can be created.